An online merchant has just launched a new product. To understand the effects of product launches, the merchant wants to use data to assess and improve its marketing channels, track and test website conversion, and measure overall website performance.

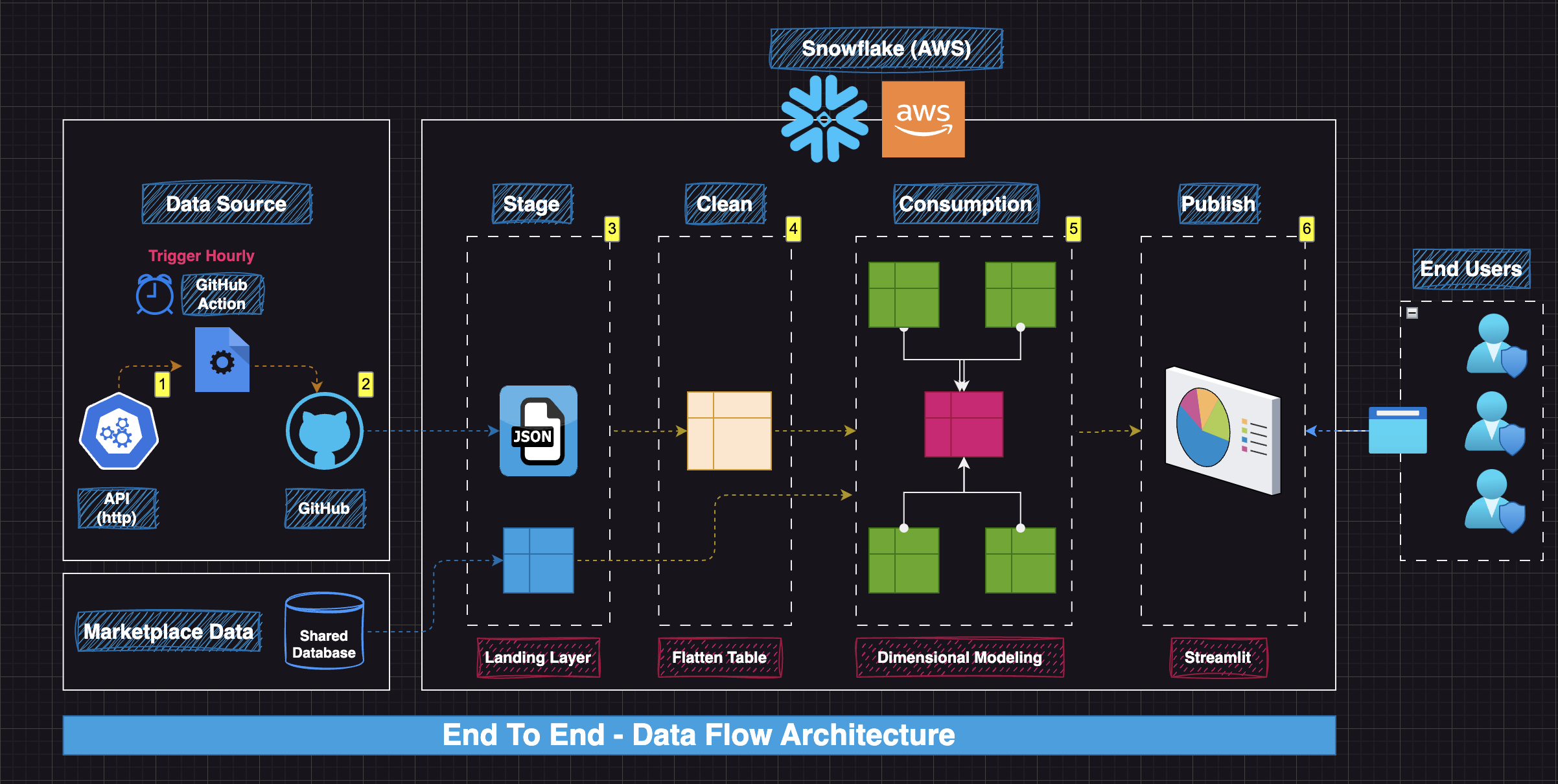

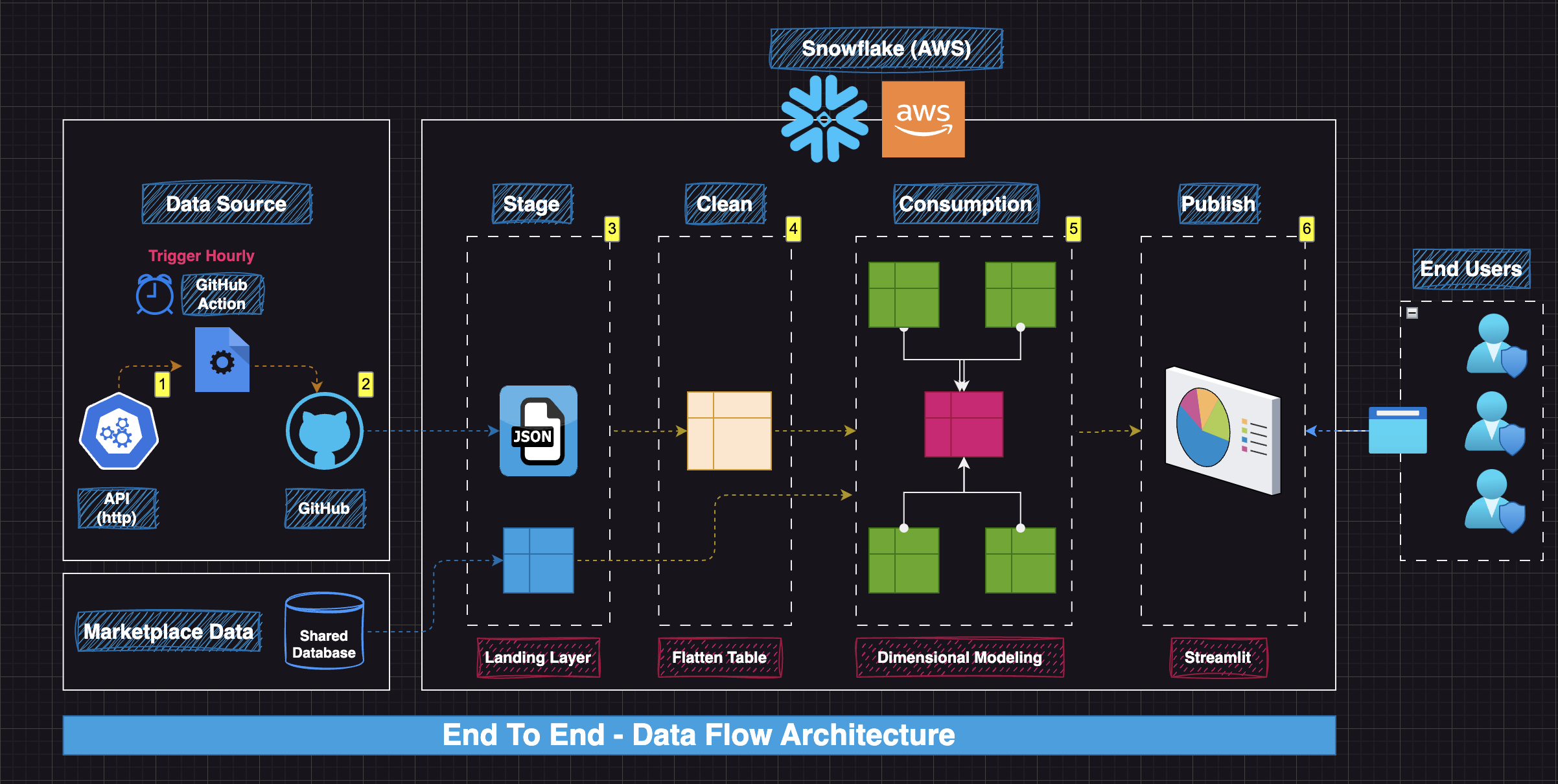

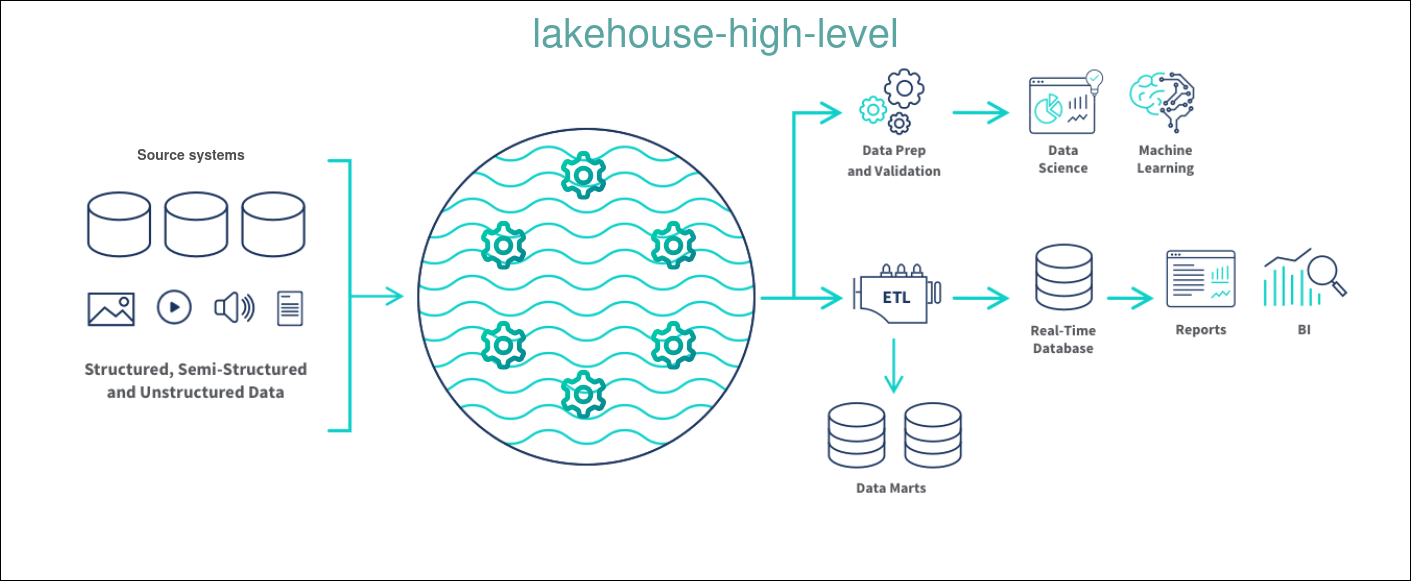

An organization wants to build a centralized data platform that aggregates global Air Quality Index (AQI) data from multiple monitoring stations and supports an interactive dashboard, allowing users to explore both real-time and historical air quality and pollutant levels with ease.

This solution empowers environmental analysts, policymakers, and the public to make data-driven decisions by providing accurate, time-based insights into air quality trends and pollution patterns worldwide.

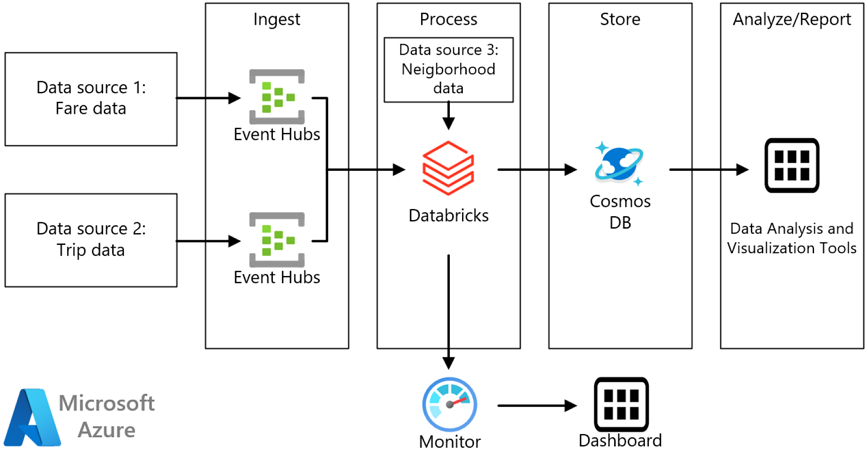

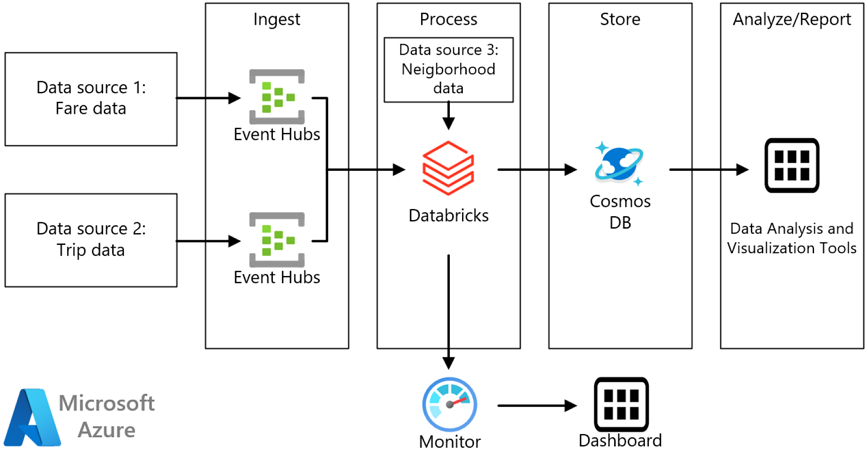

A taxi firm records information about each cab ride. In this example, we assume that two distinct devices send data. Each taxi has a meter that transmits data about every ride, including its duration, distance, and pickup and dropoff locations. A separate device accepts customer payments and transmits fare information. The firm wants to compute the average tip per mile traveled, in real time and for each neighborhood, to spot ridership trends.
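The core calculation can be sketched in plain Python as a join of the two device feeds followed by a per-neighborhood aggregate. This is only an illustrative batch version, not the streaming implementation: the field names (`ride_id`, `pickup_neighborhood`, `distance_miles`, `tip`) are hypothetical, and "average tip per mile" is assumed here to mean total tips divided by total miles within a neighborhood.

```python
from collections import defaultdict


def average_tip_per_mile(rides, fares):
    """Join ride and fare records on ride_id, then aggregate per neighborhood.

    Assumption: each ride dict has ride_id, pickup_neighborhood, distance_miles;
    each fare dict has ride_id and tip. These names are illustrative only.
    """
    # Index the fare feed by ride_id so each ride can find its tip.
    tip_by_ride = {fare["ride_id"]: fare["tip"] for fare in fares}

    # neighborhood -> [sum of tips, sum of miles]
    totals = defaultdict(lambda: [0.0, 0.0])
    for ride in rides:
        tip = tip_by_ride.get(ride["ride_id"])
        # Skip rides with no matching fare record or no usable distance.
        if tip is None or ride["distance_miles"] <= 0:
            continue
        acc = totals[ride["pickup_neighborhood"]]
        acc[0] += tip
        acc[1] += ride["distance_miles"]

    return {hood: tips / miles for hood, (tips, miles) in totals.items()}


rides = [
    {"ride_id": 1, "pickup_neighborhood": "A", "distance_miles": 2.0},
    {"ride_id": 2, "pickup_neighborhood": "A", "distance_miles": 3.0},
    {"ride_id": 3, "pickup_neighborhood": "B", "distance_miles": 4.0},
    {"ride_id": 4, "pickup_neighborhood": "B", "distance_miles": 1.0},  # no fare yet
]
fares = [
    {"ride_id": 1, "tip": 1.0},
    {"ride_id": 2, "tip": 4.0},
    {"ride_id": 3, "tip": 2.0},
]
result = average_tip_per_mile(rides, fares)
```

In a real-time setting the same join and aggregate would typically run over a time window in a stream processor, since ride and fare events for the same trip can arrive at different times.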

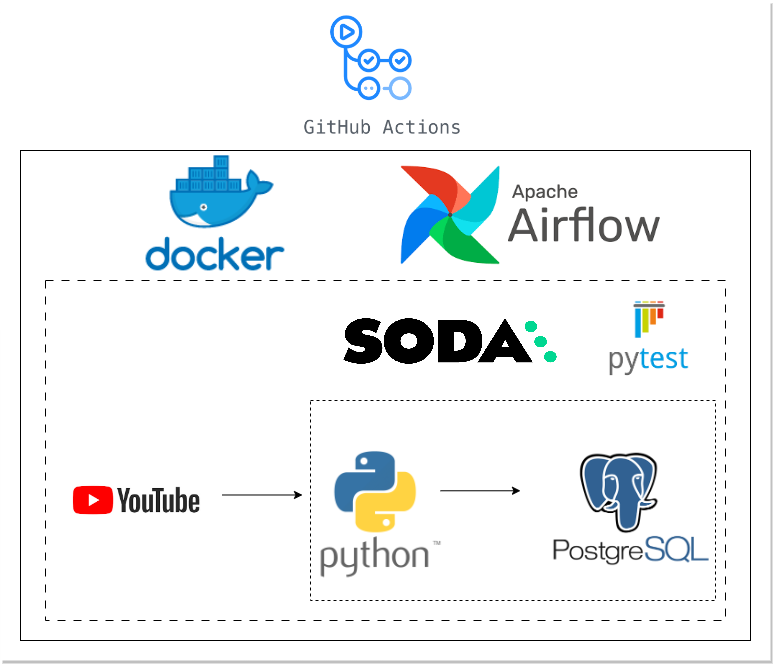

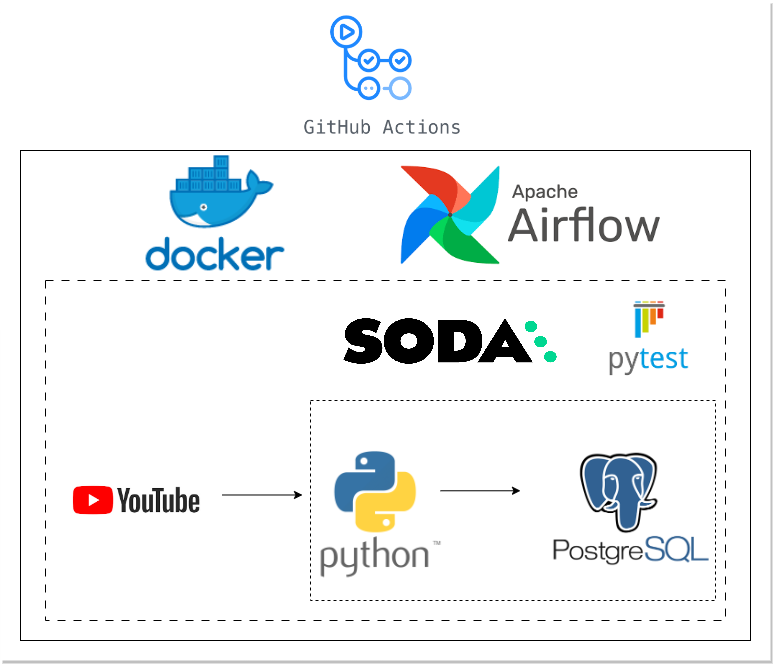

The objective of this project is to build a production-grade ELT data pipeline using modern data engineering tools such as Python, Docker, and Apache Airflow. The pipeline is designed with a focus on reliability and scalability, incorporating best practices in unit testing, data quality validation, and CI/CD automation to ensure seamless integration and continuous delivery across environments.
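As a rough illustration of what data quality validation with an accompanying unit test might look like in such a pipeline, here is a minimal sketch. The function name `split_valid_rows`, the row format (a list of dicts), and the field names are hypothetical and are not taken from the project itself.

```python
def split_valid_rows(rows, required_fields):
    """Separate rows that have every required field from rows that do not.

    A field counts as missing when the key is absent or its value is None.
    Returns a (valid, rejected) tuple of lists.
    """
    valid, rejected = [], []
    for row in rows:
        missing = [field for field in required_fields if row.get(field) is None]
        if missing:
            rejected.append(row)
        else:
            valid.append(row)
    return valid, rejected


def test_split_valid_rows():
    """A pytest-style unit test for the validation step."""
    rows = [
        {"id": 1, "amount": 10.0},
        {"id": 2, "amount": None},  # null value: rejected
        {"amount": 5.0},            # missing key: rejected
    ]
    valid, rejected = split_valid_rows(rows, ["id", "amount"])
    assert valid == [{"id": 1, "amount": 10.0}]
    assert len(rejected) == 2


test_split_valid_rows()
```

A check like this could run as its own task between the load and transform steps, and the test could run in CI on every commit. How the project wires this up is not stated in the description.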

E-commerce businesses generate vast amounts of operational and clickstream data from orders, interactions, and marketing events. This data is often siloed and arrives in inconsistent formats and at different speeds. This project builds a modern end-to-end data platform that ingests, processes, models, and serves both operational and clickstream data. By combining batch and streaming data engineering patterns, it enables near real-time analytics and scalable data processing. The platform supports automated data ingestion, transformation, and modeling workflows, giving e-commerce teams timely insights for performance tracking, personalization, and strategic decision-making.

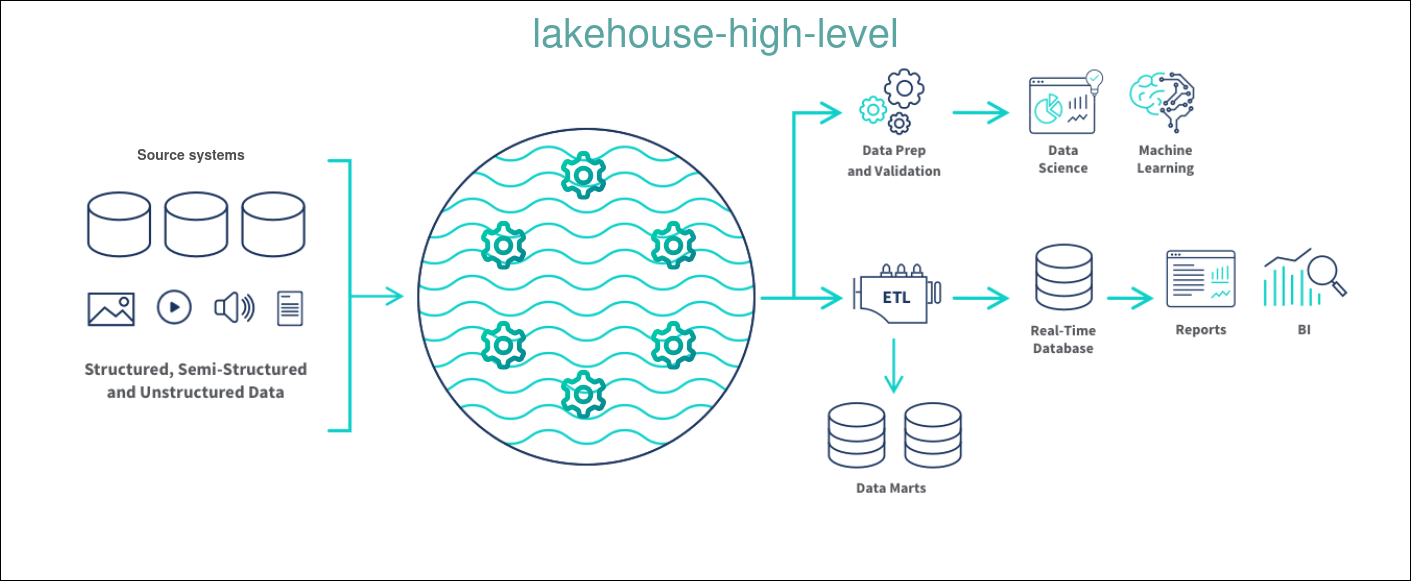

The increased COVID-19 mortality rate in Europe has been attributed to population aging. Our data science team intends to build predictions from population data, grouped by age group for each European country, published on the Eurostat website. We must ingest this data into the data lake so that the machine learning algorithms can use it.